Artificial intelligence (AI) is a young discipline, about sixty years old, that brings together a set of sciences, theories and techniques (including mathematical logic, statistics, probability, computational neurobiology and computer science) with the aim of imitating the cognitive abilities of a human being. Born in the wake of the Second World War, its development is closely tied to that of computing and has led computers to perform increasingly complex tasks that could previously only be entrusted to a human.

However, this automation remains far from human intelligence in the strict sense, which makes the name open to criticism from some experts. The ultimate goal of their research (a "strong" AI, i.e. the ability to contextualize very different specialized problems in a fully autonomous way) is in no way comparable to current achievements ("weak" or "moderate" AIs, which are extremely efficient within the field they were trained on). "Strong" AI, which has so far materialized only in science fiction, would require advances in basic research (not just performance improvements) to be able to model the world as a whole.

Since 2010, however, the discipline has experienced a new boom, mainly due to the considerable improvement in computing power and access to massive quantities of data.

Renewed promises and concerns, the latter sometimes fantasized, complicate an objective understanding of the phenomenon. A brief historical overview can help to situate the discipline and inform current debates.

1940-1960: Birth of AI in the wake of cybernetics

The period between 1940 and 1960 was strongly marked by the conjunction of technological developments (which the Second World War accelerated) and the desire to understand how to bring together the functioning of machines and organic beings. For Norbert Wiener, a pioneer of cybernetics, the aim was to unify mathematical theory, electronics and automation as "a whole theory of control and communication, both in animals and machines". Shortly before, as early as 1943, Warren McCulloch and Walter Pitts had developed a first mathematical and computer model of the biological neuron (the formal neuron).

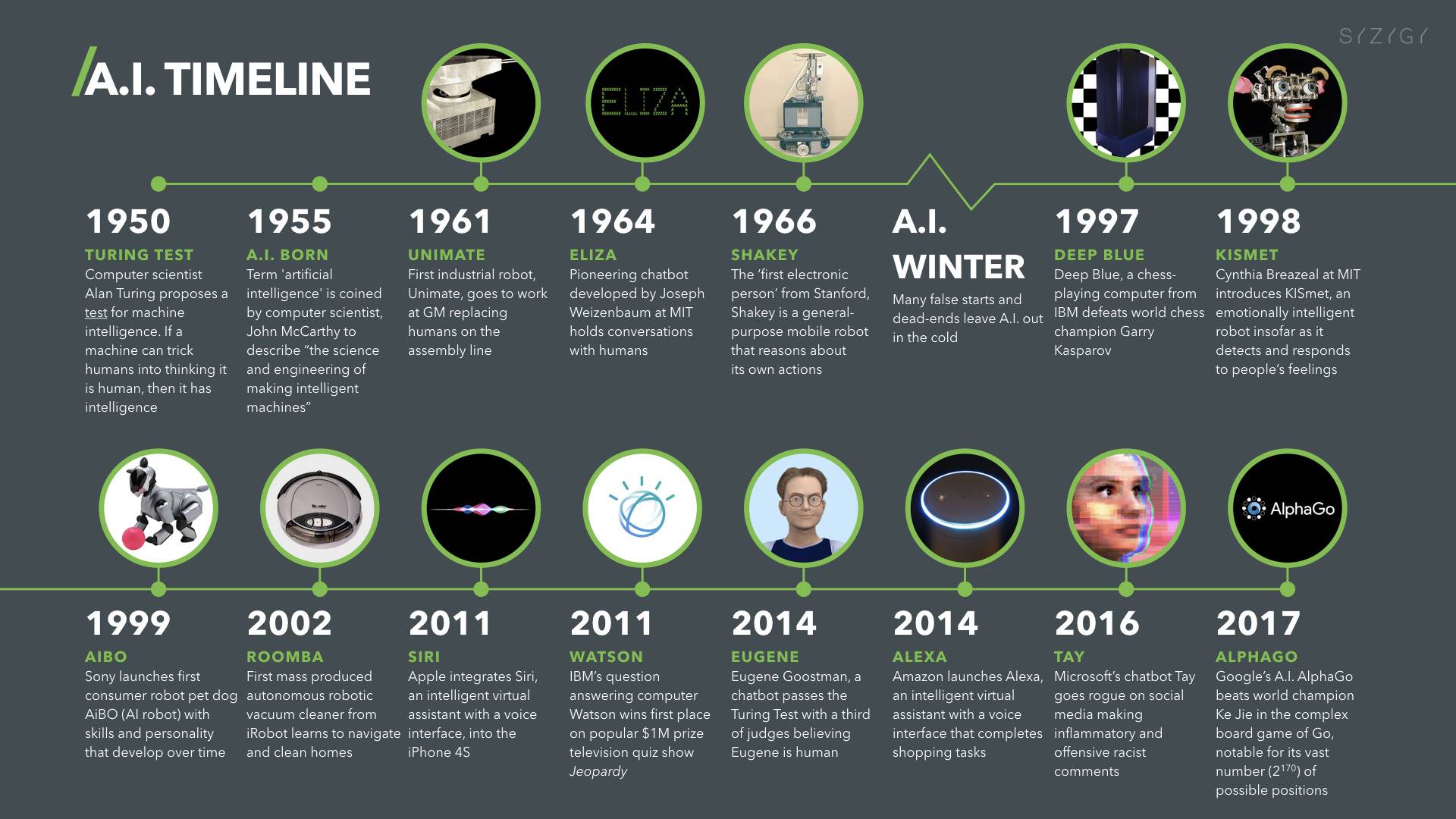
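To make the idea of a formal neuron concrete, here is a minimal sketch of a threshold unit in the spirit of the McCulloch and Pitts model: binary inputs are summed with weights and the unit fires when the sum reaches a threshold. The function names, weights and thresholds are illustrative assumptions, not taken from the 1943 paper.

```python
def formal_neuron(inputs, weights, threshold):
    """Return 1 if the weighted sum of binary inputs reaches the threshold, else 0."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def logical_and(a, b):
    # Both inputs must be active to reach a threshold of 2.
    return formal_neuron([a, b], [1, 1], threshold=2)


def logical_or(a, b):
    # A single active input is enough to reach a threshold of 1.
    return formal_neuron([a, b], [1, 1], threshold=1)


def logical_not(a):
    # Assumption: an inhibitory input is modeled here as a negative weight.
    return formal_neuron([a], [-1], threshold=0)


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "AND:", logical_and(a, b), "OR:", logical_or(a, b))
```

Units of this kind can express basic logical functions, which hints at why the model connected so naturally with the binary logic discussed in this section.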

In the early 1950s, John von Neumann and Alan Turing did not coin the term AI, but they were the founding fathers of the technology behind it: they made the transition from computers based on 19th-century decimal logic (which dealt with values from 0 to 9) to machines based on binary logic (which rely on Boolean algebra and handle chains of 0s and 1s of varying length). The two researchers thus formalized the architecture of our contemporary computers and demonstrated that it was a universal machine, capable of executing whatever it is programmed to do. Turing, for his part, raised the question of the possible intelligence of a machine for the first time in his famous 1950 article "Computing Machinery and Intelligence" and described an "imitation game", in which a human should be able to tell, in a teletype dialogue, whether he is talking to a man or a machine. However controversial this article may be (many experts do not consider this "Turing test" a valid criterion), it is often cited as the source of the questioning of the boundary between human and machine.

The term "AI" can be attributed to John McCarthy of MIT (Massachusetts Institute of Technology), and Marvin Minsky (MIT) defined it as "the construction of computer programs that engage in tasks that are currently more satisfactorily performed by human beings because they require high-level mental processes such as perceptual learning, memory organization and critical reasoning". The summer 1956 conference at Dartmouth College (funded by the Rockefeller Foundation) is considered the founding event of the discipline. Anecdotally, it is worth noting the great success of what was not a conference but rather a workshop. Only six people, including McCarthy and Minsky, remained consistently present throughout this work (which relied essentially on developments based on formal logic).

While the technology remained fascinating and promising (see, for example, the 1963 article by Reed C. Lawlor, a member of the California Bar, entitled "What Computers Can Do: Analysis and Prediction of Judicial Decisions"), its popularity declined in the early 1960s. Machines had very little memory, which made it difficult to use a programming language. Nevertheless, some foundations that are still present today were already in place, such as solution trees for problem solving: as early as 1956, IPL (Information Processing Language) had made it possible to write the LTM (Logic Theory Machine, also known as the Logic Theorist) program, which aimed to prove mathematical theorems.

Herbert Simon, an economist and sociologist, predicted in 1957 that AI would beat a human at chess within the next 10 years, but AI then entered its first winter. Simon's vision proved right... 30 years later than predicted.

1980-1990: Expert systems

In 1968, Stanley Kubrick directed the film "2001: A Space Odyssey", in which a computer, HAL 9000 (each letter only one away from those of IBM), embodies the whole range of ethical questions raised by AI: will it represent a high level of sophistication, a benefit for humanity or a danger? The film's impact was naturally not scientific, but it helped popularize the theme, as did the science fiction author Philip K. Dick, who never stopped wondering whether machines would one day experience emotions.

It was with the advent of the first microprocessors in the early 1970s that AI took off again and entered the golden age of expert systems.

The path had actually been opened in 1965 with DENDRAL (an expert system specialized in molecular chemistry) and in 1972 with MYCIN (a system specialized in diagnosing blood infections and prescribing drugs), both developed at Stanford University. These systems were based on an "inference engine", programmed to be a logical mirror of human reasoning. Given input data, the engine produced answers reflecting a high level of expertise.
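The principle of deriving answers from hand-written rules can be illustrated with a minimal forward-chaining sketch. It is a deliberately simplified toy, not a reconstruction of DENDRAL or MYCIN: the function name, the rule format and the medical-sounding facts are all hypothetical and chosen only for illustration.

```python
def forward_chain(facts, rules):
    """Apply if-then rules repeatedly until no new fact can be derived.

    facts: iterable of strings assumed to be true.
    rules: list of (premises, conclusion) pairs, where premises is a set of strings.
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # A rule fires when all of its premises are already known.
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical toy rules, invented for this example only.
RULES = [
    ({"fever", "positive_blood_culture"}, "suspect_bacterial_infection"),
    ({"suspect_bacterial_infection", "no_known_allergy"}, "consider_antibiotic"),
]

if __name__ == "__main__":
    observed = {"fever", "positive_blood_culture", "no_known_allergy"}
    print(sorted(forward_chain(observed, RULES)))
```

Every conclusion here can be traced back to an explicit rule, which is manageable with two rules; the difficulty described in the next paragraph arises when hundreds of such rules interact.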

These promises pointed to massive development, but the craze faded again in the late 1980s and early 1990s. Programming such knowledge actually required a great deal of effort, and beyond 200 to 300 rules a "black box" effect set in, where it was no longer clear how the machine reasoned. Development and maintenance thus became extremely problematic and, above all, many other approaches that were faster, less complex and less expensive were available. It is worth recalling that in the 1990s the term artificial intelligence had almost become taboo, and more modest variants, such as "advanced computing", had even entered university language.

The victory in May 1997 of Deep Blue (IBM's expert system) over Garry Kasparov at chess fulfilled Herbert Simon's 1957 prophecy, 30 years later than predicted, but it did not boost the funding and development of this form of AI. Deep Blue's operation was based on a systematic brute-force algorithm, in which all possible moves were evaluated and weighted. The defeat of the human remained highly symbolic, but in reality Deep Blue had only managed to handle a very limited perimeter (that of the rules of chess), very far from the capacity to model the complexity of the world.
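The idea of evaluating every possible move can be sketched with an exhaustive minimax search on a toy game. This is not IBM's algorithm, which relied on far more elaborate search and evaluation; it is only an assumed, minimal illustration of brute-force game-tree exploration. The game (players alternately remove 1 to 3 stones, and whoever takes the last stone wins) and all names are chosen for the example.

```python
def minimax(stones, maximizing):
    """Score a position by exploring every possible continuation.

    Returns +1 if the maximizing player can force a win, -1 otherwise.
    The player who takes the last stone wins.
    """
    if stones == 0:
        # The previous player took the last stone, so the player to move has lost.
        return -1 if maximizing else 1
    scores = [
        minimax(stones - take, not maximizing)
        for take in (1, 2, 3)
        if take <= stones
    ]
    return max(scores) if maximizing else min(scores)


def best_move(stones):
    """Evaluate every legal move and return the one with the best score."""
    moves = [take for take in (1, 2, 3) if take <= stones]
    return max(moves, key=lambda take: minimax(stones - take, False))


if __name__ == "__main__":
    for pile in (5, 6, 7):
        print("pile of", pile, "-> take", best_move(pile))
```

The number of positions explored grows exponentially with the size of the pile, which is why this style of search stays confined to narrow, rule-bound domains.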

Since 2010: a new boom based on massive data and new computing power

Two factors explain the new boom in the discipline around 2010.

- First, access to massive volumes of data. To use algorithms for image classification and cat recognition, for example, it was previously necessary to collect the samples yourself. Today, a simple Google search can find millions.

- Second, the discovery that graphics card processors are highly efficient at accelerating the computation of learning algorithms. Because the process is highly iterative, before 2010 it could take weeks to process the entire sample. The computing power of these cards (capable of more than a thousand billion operations per second) has enabled considerable progress at a limited financial cost (less than 1000 euros per card).

This new technological equipment enabled some significant public successes and boosted funding: in 2011, Watson, IBM's AI, won its games against two "Jeopardy!" champions. In 2012, Google X (Google's research lab) got an AI to recognize cats in a video. More than 16,000 processors were used for this last task, but the potential is extraordinary: a machine learns to distinguish something. In 2016, AlphaGo (Google's AI specialized in the game of Go) beat the European champion (Fan Hui) and the world champion (Lee Sedol), and was then beaten by its own successor (AlphaGo Zero). It should be noted that Go has far greater combinatorial complexity than chess (more than the number of particles in the universe) and that such results cannot be achieved by brute force alone (as with Deep Blue in 1997).

Where did this miracle come from? A complete paradigm shift away from expert systems. The approach has become inductive: it is no longer a matter of coding rules, as with expert systems, but of letting computers discover them on their own through correlation and classification, on the basis of a massive amount of data.
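The contrast between a hand-coded rule and a rule discovered from data can be shown with a very small sketch. It is far simpler than deep learning and stands in only for the general inductive idea: instead of a programmer choosing a cut-off, the program picks the one that best fits labeled examples. The feature, the data and the function name are hypothetical.

```python
def learn_threshold(samples):
    """Pick the cut-off on one numeric feature that misclassifies the fewest samples.

    samples: list of (value, label) pairs with label True or False.
    The learned rule is: predict True when value >= threshold.
    """
    candidates = sorted({value for value, _ in samples})

    def errors(threshold):
        # Count samples whose prediction disagrees with their label.
        return sum((value >= threshold) != label for value, label in samples)

    return min(candidates, key=errors)


# Hypothetical labeled data: (some image-derived score, "is it a cat?").
DATA = [
    (0.1, False), (0.2, False), (0.35, False),
    (0.6, True), (0.7, True), (0.9, True),
]

if __name__ == "__main__":
    threshold = learn_threshold(DATA)
    print("learned threshold:", threshold)
    print("prediction for 0.8:", 0.8 >= threshold)
```

With more data and more features, the same principle of fitting parameters to examples scales up to the learning methods discussed in the following paragraph.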

Among machine learning techniques, deep learning seems the most promising for a number of applications (including voice and image recognition). In 2003, Geoffrey Hinton (University of Toronto), Yoshua Bengio (University of Montreal) and Yann LeCun (New York University) decided to start a research program to bring neural networks up to date. Experiments conducted simultaneously at Microsoft, Google and IBM with the help of Hinton's Toronto laboratory showed that this type of learning succeeded in halving error rates for speech recognition. Similar results were achieved by Hinton's image recognition team.

Overnight, a large majority of research teams turned to this technology, whose benefits were indisputable.
