My relationship with Artificial Intelligence is... complicated. This is partially because there's a big difference between my personal and professional life...

Professionally - I rigorously studied Machine Learning in Graduate School (and it was a heavy component of my Master's thesis). Even now, in my 9-5 day job, I am a research contractor who works on studies that employ Machine Learning.

Personally - I like to write silly little stories and poems.

So, as someone who is fascinated with both AI and writing, you would think that I would be jumping all over the opportunity to marry my personal and professional lives.

But the reality is, I think it's a waste of both the potential of AI and the potential of writers.

So if I have not lost you already, let me try and state my case.

Let's start with some definitions

To make sure that everyone is on the same page, I wanted to include a few definitions of common AI terms (skip ahead if you're familiar with the subject):

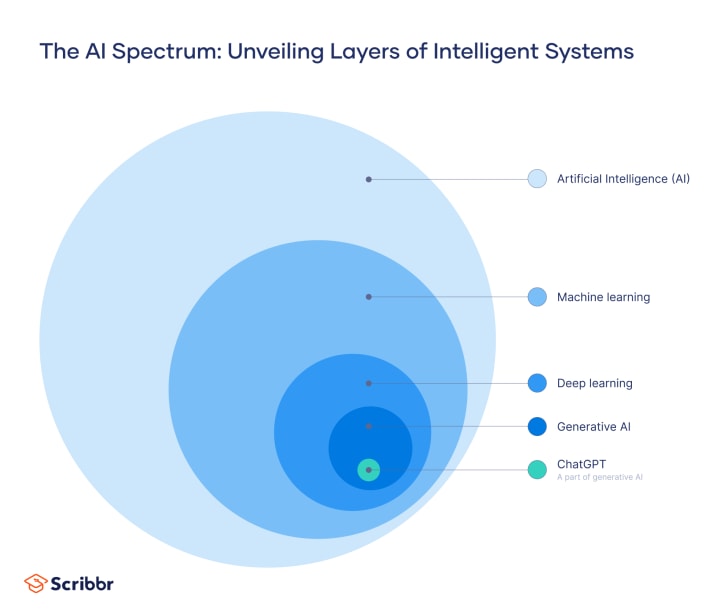

Artificial Intelligence (AI) - refers to any technique which uses technology to either mimic or surpass human functionalities, behaviors, and capabilities.

Machine Learning (ML) - a subset of artificial intelligence that can even be described as a pathway to AI. Machine learning uses sets of rules and calculations (algorithms) to recognize patterns in data.

Deep Learning - an advanced method of machine learning, which uses large models (neural networks) to learn complex data patterns and make predictions independently.

Generative AI - a type of deep learning that can generate text, images, and other content. This is the main focus of this article, as many AI tools employ generative AI (ChatGPT, Stable Diffusion, Soundraw, Jasper, etc.).

Argument #1: The Questionable Ethics of Developing AI

There's a lot to unpack about the ethics of AI. However, for the sake of this article, I would like to limit this discussion to generative AI as it relates to creative work and creatives. Particularly, one of the most common arguments you'll hear is....

Generative AI steals work from creatives.

And... there's some truth to this. So let's discuss.

To explain a little more about machine learning: the algorithms used in ML need to be trained and tested before they are ready to be used. For this purpose, researchers need to "feed" their algorithms data until they reach suitable levels of pattern recognition. The more complex the patterns, the more data you need to train a decently working model. Because generative AI is complex, it needs thousands, if not millions, of data points for proper training/testing.
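For the programmers in the room, here is a minimal Python sketch of that train-then-test workflow. It is a toy invented for illustration: the hidden pattern `y = 2x + 1`, the function names, and the 80/20 split are all assumptions, and it is nowhere near the scale of a real generative model. The shape of the process is the same, though: feed the algorithm data, then check it against data it has never seen.

```python
import random

def make_data(n, seed=0):
    """Generate n noisy points from an invented hidden pattern, y = 2x + 1."""
    rng = random.Random(seed)
    points = []
    for _ in range(n):
        x = rng.uniform(0, 10)
        y = 2 * x + 1 + rng.uniform(-0.5, 0.5)  # pattern plus a little noise
        points.append((x, y))
    return points

def train(points):
    """Fit a straight line to the training points with ordinary least squares."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in points)
             / sum((x - mean_x) ** 2 for x, _ in points))
    intercept = mean_y - slope * mean_x
    return slope, intercept

def evaluate(model, points):
    """Mean absolute error of the model on points it never saw in training."""
    slope, intercept = model
    return sum(abs(slope * x + intercept - y) for x, y in points) / len(points)

data = make_data(100)
train_set, test_set = data[:80], data[80:]  # hold out 20% for testing
model = train(train_set)
error = evaluate(model, test_set)
print(f"learned slope={model[0]:.2f}, intercept={model[1]:.2f}, test error={error:.2f}")
```

A straight line has only two numbers to learn, so a hundred points are plenty here. A model that has to learn what a painting or a paragraph looks like has vastly more to learn, which is why it needs vastly more data.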

So to get all that data, researchers used large archives (that held the works of countless creatives) and fed them to their algorithms. This becomes problematic because many creators never gave permission for their work to be used like this. Often, their work was "donated" by archive hosts. For example, DeviantArt, a popular website for artists, did some shady things to give its users' works over to AI. They even went so far as to obscure their policies and make artists jump through difficult hoops to attempt to get their art out of these AI usage archives.

And it gets worse because these algorithms use sketchy permissions (at best) and outright theft (at worst) to create a generative tool that still tends to plagiarize from the data it's been given. So the creative works that built these models are also getting ripped off by these models. Although this happens for all generative AI, I think this problem is best explained with AI artwork since it is easier to "see" the plagiarism (then you can infer what this means for AI-generated text and music).

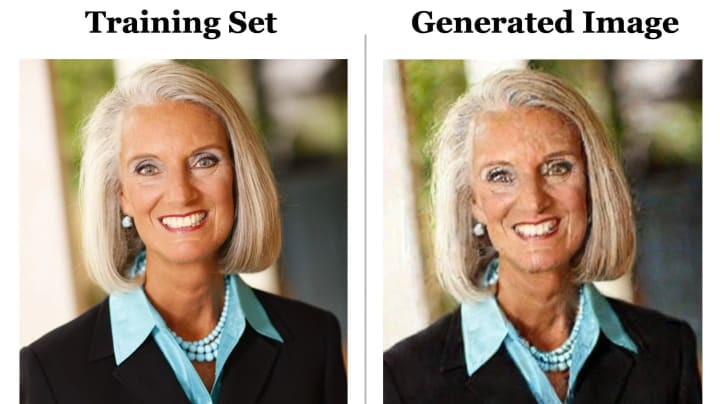

I think an interesting recent example is when the AI portrait generator, Lensa, produced portraits that still had the remains of artist signatures:

And if you are a skeptic who thinks, "well, maybe this AI is just imitating the look of a signature," please note that there is precedent for AI image generators reproducing copyrighted images from their data sets, given the right prompt.

Research has shown that AI-generated work can directly copy and plagiarize the model's own training data. So yes, any creative work given to an AI algorithm can be copied and regurgitated by that same model, effectively "stealing" it.
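To get a feel for how a model can regurgitate its training data, here is a deliberately tiny Python sketch. It is an assumption-laden toy, not how image or text generators actually work: a word-level "bigram" model that only remembers which word followed which, trained on a single invented sentence. The function names and the sentence are hypothetical.

```python
from collections import defaultdict

def train_bigram(corpus):
    """Record, for every word, which words followed it in the training text."""
    followers = defaultdict(list)
    for sentence in corpus:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            followers[current].append(nxt)
    return followers

def generate(followers, start, max_words=20):
    """Extend `start` by always picking the most frequent follower seen in training."""
    words = [start]
    while len(words) < max_words and words[-1] in followers:
        options = followers[words[-1]]
        words.append(max(set(options), key=options.count))
    return " ".join(words)

# Invented one-sentence "training set" standing in for someone's creative work.
corpus = ["quiet rivers carry old stories toward the sea"]
model = train_bigram(corpus)

output = generate(model, "quiet")
print(output)               # prints: quiet rivers carry old stories toward the sea
print(output == corpus[0])  # prints: True
```

Given the right prompt (here, just the first word), the toy model hands back its training sentence word for word. Real generative models are enormously more complex, but this is the basic intuition behind the copying that researchers have reported.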

This raises incredible moral questions about the exploitation of creative works to develop AI tools, and the resulting theft of creative property. It's a system that has exploited many creatives, and continues to exploit creatives as it grows.

Argument #2: The Lack of Legal Protections for Users

But let's say you don't agree that AI tools exploit creatives - it hasn't hurt you, and it hasn't hurt anyone you know. So why not make your life a little easier and use these tools?

Consider this: using AI tools may make the user more prone to being exploited too.

There's a lot unfolding in the legal world as this technology has begun to raise many questions about copyright and intellectual property. While things may adapt and change, a landmark case in August 2023 set the precedent that people who use AI to generate content are unable to protect that work with copyright laws. In this case, the product in question was made entirely with AI, so there will still be a lot of legal nuance to "how much AI-assisted content" is too much to hold copyright. But this should still be a red flag for those who use AI tools. In the future, you may not be able to protect your work from theft, because the usage of AI might mean it is no longer legally considered yours.

Argument #3: Bias and the Death of Originality

Humans are biased creatures. Computers are not.

Right?

Well, what if that computer is trained by biased humans?

I talked about the training of machine learning algorithms a lot in my first argument - but there is another point worth mentioning. Since machine learning is a method for pattern recognition, it picks up on all types of patterns. Even ones that we didn't exactly intend to teach it. Therefore, machine learning is prone to absorb the biases of the data it is trained with.

Once again, I'll begin my point by giving you an example from the world of AI image generation, because it's easiest to see:

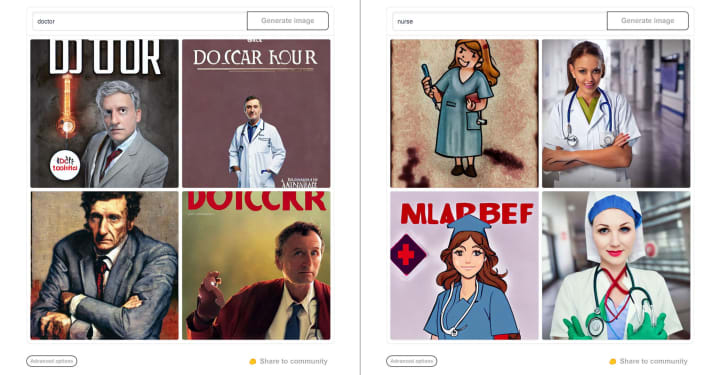

On average - when asked to imagine a doctor, what do you think most Americans imagine?

A white man.

If you ask an AI image generator to conjure a doctor... what do you think it pulls?

A white man.

And what happens if I ask the same question, but change "doctor" to "nurse"? Well... then you might start seeing white women.

So as you can see, there's a clear gender bias in the images that AI generates. And on top of that, there's a complete lack of diversity too. Someone out there might say "well this is just because most doctors are white men. It's a matter of demographics." But I'll argue that this isn't an accurate reflection of the demographics either. As of 2022, 54.2% of all doctors are women, and 37.8% of doctors are people of color. So why weren't any of those doctor images women? And why were they all white?

(It's bias.)

This bias is not limited to images. All machine learning is prone to the implicit and explicit biases of its training material. If I am writing a book and ask AI to help me with a hospital scene, chances are, the AI is going to refer to all doctors as "he" and nurses as "she." And this would just be the tip of the iceberg. Think about it. Humans carry biases about everything - the gender of professionals, the profile of a criminal, the thing a character sees on TV....

Suddenly, AI becomes the summation of our unintended biases. At best, this is reductive of the human experience, and at worst, this enables and promotes harmful stereotypes.
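The hospital-scene example can be sketched in a few lines of Python. This is a toy under loud assumptions: the "training data" below is invented and deliberately skewed, the function names are hypothetical, and a simple counter stands in for a real model. It only shows the mechanism of a pattern-learner picking up a pattern nobody meant to teach it.

```python
from collections import Counter

# Invented, deliberately skewed "training data": (profession, pronoun) pairs.
training_pairs = [
    ("doctor", "he"), ("doctor", "he"), ("doctor", "he"), ("doctor", "she"),
    ("nurse", "she"), ("nurse", "she"), ("nurse", "she"), ("nurse", "he"),
]

def count_pronouns(pairs):
    """Count how often each pronoun appeared alongside each profession."""
    counts = {}
    for profession, pronoun in pairs:
        counts.setdefault(profession, Counter())[pronoun] += 1
    return counts

def predict_pronoun(counts, profession):
    """Return the pronoun seen most often with this profession in training."""
    return counts[profession].most_common(1)[0][0]

model = count_pronouns(training_pairs)
print(predict_pronoun(model, "doctor"))  # prints: he
print(predict_pronoun(model, "nurse"))   # prints: she
```

Notice that in this toy data only 75% of the doctors were "he," yet the model answers "he" 100% of the time. Always choosing the most common pattern doesn't just repeat the bias in the data; it can exaggerate it.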

This bias even extends to the "usual" ways that things appear - the "standard" way of framing a sentence, the "basic" word choices one might pick, and the "typical" structure of a story or article. In essence, AI also gets biased towards the unoriginal, the cliché, and the average.

All AI can do is create a summation of the average sentence, the average character, the average story. And all of this averageness is self-contained in a biased perspective of what this world actually looks like.

And that's boring.

Some folks may argue - I wasn't planning on using AI for an entire novel, I'm just using it to fix a little writer's block. It would be alright if just a sentence or two was "average."

But even so - perhaps you're selling yourself short.

Perhaps if you struggled with yourself and your words for a little longer, you could think of something brilliant instead.

Generative AI is incapable of writing anything that is inspired.

Only a human can do that.

Argument #4: The Cheapening of Human Creativity

There's another argument I've heard often against generative AI: it takes jobs away from creatives. And while this is a real and valid concern - this is actually not what I mean by this point (that in itself might be a separate conversation).

So let me deviate for a moment and address the elephant in the room: Why are writers drawn to the use of AI tools in the first place?

The initial answer is obvious: it's helpful! AI tools can assist writers with idea generation, detect errors, unclog writer's block, offer feedback and provide an initial structure for stories and articles alike. There's a lot of potential for good there.

But I have a working theory that there's a less discussed reason why writers are drawn to AI tools.

Pressure.

This can be either self-imposed pressure or environmentally-imposed pressure.

Self-imposed pressure comes from the writer's image of themselves. I've never met a writer who wasn't hard on themselves - always feeling like they weren't "enough" in some way. Not smart enough, not good enough, not accomplished enough, not writing enough, not poetic enough.... and so on.

Environmentally-imposed pressure could come from the constraint of time, a deadline, or a job. It could also come from money. There's a recent trend (especially in the younger generations) of monetizing one's hobbies, which can warp the creative experience into a commercial one.

So regardless of the reason, many writers feel intense pressure to optimize their writing. And when there is intense pressure to output constantly (and perfectly), suddenly things such as "writer's block" get a lot more stressful, because they add to the pile of pressure.

And then AI tools swoop in and promise to take the pressure away.

And can you blame anyone for saying yes to that?

But keep following me here; perhaps instead of simply saying "AI tools can help writers," we should instead address the reasons writers have turned to AI. All situations are different, but if you are a writer and want to turn to an AI tool, I do encourage you to first ask yourself why? What is the pressure that is compelling you to turn to this tool?

Because maybe you need to hear this: that AI tool is not better than you.

You are a writer - give yourself permission to write slow, to write "bad," to write without thinking about anything else. Because at the end of the day, everything you write should be saturated with you.

Your quirks.

Your personality.

Your mistakes.

Your beautiful and wonderfully creative soul.

Writing is a uniquely human experience. Why on Earth should we give it all away to an algorithm?

Concluding Thoughts

If you have used (or do use) AI tools for any reason, my intention is not to shame you. Rather, as both a researcher and a writer, I simply wanted to provide the context that I often feel is lacking from conversations about AI. I wanted to compile my experience in both fields to support my argument.

Generative AI is not inherently bad - but it needs to be built and used responsibly.

We humans have a lot to think about when it comes to the future of AI and creativity, and that is why these conversations are so, so important. Technology is changing rapidly, so we have to come together as humans and make some decisions soon. How should we respect creatives? What should copyright protect? What biases have seeped into our technology? And how should we choose to be creative in today's world?

Now you know what I think.

So what do you think?

Sources

- “Artificial Intelligence (AI) vs. Machine Learning.” CU-CAI, 3 Mar. 2022, ai.engineering.columbia.edu/ai-vs-machine-learning/.

- Barr, Kyle. “Researchers Prove AI Art Generators Can Simply Copy Existing Images.” Gizmodo, 1 Feb. 2023, gizmodo.com/ai-art-generators-ai-copyright-stable-diffusion-1850060656.

- Delgado, Rodolfo. “Council Post: The Risk of Losing Unique Voices: What Is the Impact of AI on Writing?” Forbes, Forbes Magazine, 14 July 2023, www.forbes.com/sites/forbesbusinesscouncil/2023/07/11/the-risk-of-losing-unique-voices-what-is-the-impact-of-ai-on-writing/?sh=78c64bfb4db6.

- Setty, Riddhi, and Isaiah Poritz. “AI-Generated Art Lacks Copyright Protection, D.C. Court Says (1).” Bloomberg Law, 18 Aug. 2023, news.bloomberglaw.com/ip-law/ai-generated-art-lacks-copyright-protection-d-c-court-rules.

- Sterbenz, Christina. “DeviantArt’s Decision to Label AI Images Creates a Vicious Debate among Artists and Users.” ARTnews, 19 July 2023, www.artnews.com/art-news/news/deviantart-artficial-intelligence-ai-images-midjourney-stabilityai-art-1234674400/.
