The ethics of autonomous weapons are still up for debate, even as the benefits of AI spread widely, from safer vehicles to new robots that can do things like shop for groceries or deliver prescriptions.

Researchers from academia, industry, and government presented ideas at the IEEE International Conference on Intelligent Robots and Systems. In these presentations, they discussed robots that can make their own decisions while taking into account everything in their surrounding environment.

One of the hardest parts of advancing AI research is predicting what impact it will have and deciding where to focus.

The technology has advanced substantially over the years. Today's models succeed about 50% of the time, and that rate is expected to rise to 99% over the next 10 years.

Thanks to recent advances in AI, robots can now understand what they are seeing. Ten years ago, the best algorithms had an accuracy rate of 86%.

Improvements in computer systems allow data such as video footage to be parsed with remarkable accuracy and efficiency.

Copymatic could change the ethical dilemmas around online privacy because it removes human involvement from content creation. It combines structured data and machine learning to produce content that it presents as equal in quality to writing by a human.

Street cameras raised privacy concerns 20 years ago, but adding facial recognition software changes those implications.

When developing machines that can make their own decisions, it is important to stay mindful of the ethical questions they raise. Given the right parameters, these autonomous systems will generate content on their own.

AI-enhanced autonomy is developing rapidly, putting high-level system capabilities within reach of anyone with a household toolkit and some computer experience.

Online forums help anyone learn how to run techniques such as machine learning on robots. The technology is accessible to people without a computer science background and can be used to build more efficient AI systems.

Previously, a programmer needed to make only minor changes to an existing drone for it to correctly identify a red bag and follow it.

Newer object detection technology can track more than 9,000 different object types, and it can also identify a range of things that resemble them.

Drones are increasingly maneuverable and can be equipped with weapons, which makes them easy to use in combat. Combined with detection and identification technology, a drone can itself become a bomb or a weapon.

Drones are already used in a variety of ways, and they already pose a threat. They have dropped explosives on U.S. troops, shut down airports, and been used in an assassination attempt on Venezuela's Nicolas Maduro.

Robotic systems in the future will make it easier to stage attacks.

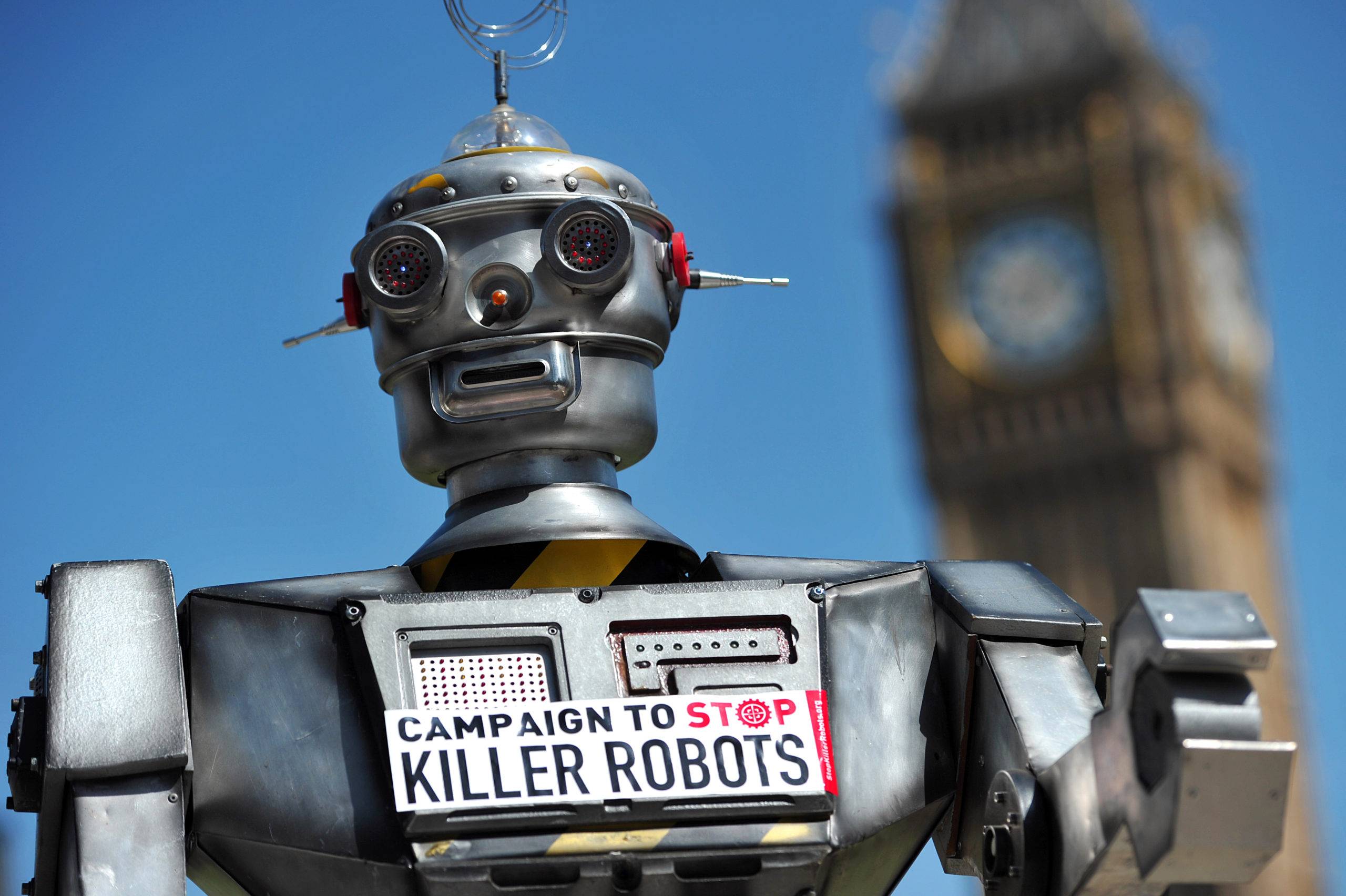

Three years ago, a group of researchers created a pledge to refrain from developing lethal autonomous weapons. The term "lethal autonomous weapons" refers to robots that can select and engage targets without human intervention. The pledge failed to address important ethical questions, especially those that span both benign and violent drone applications.

The researchers, companies, and developers who wrote the papers and built the software and devices may not have intended to create weapons. However, their work inadvertently allows people with minimal expertise to build such weapons.

One way to govern drone use or protect privacy is through regulation, such as banning drones near airports or in national parks. Traditional weapons regulations, however, work by controlling source materials or the manufacturing process, and that approach does not carry over to these systems.

Imposing such controls on autonomous systems would be nearly impossible, because the input material is freely available computer code and the systems can be assembled at home from a set of off-the-shelf components.

There is precedent for researchers regulating themselves: in 1975, experts met at Asilomar in California and agreed on voluntary guidelines to avoid the dangers of recombinant DNA research.

Despite the potential for weaponization, some research projects have peaceful and highly beneficial outcomes.

Institutional review boards oversee human-subject studies at companies, universities, and government labs, helping ensure that those studies are ethical and voluntary. These boards can regulate research, but their authority is limited to their own institution.

A first step toward self-regulation would be for these boards to review research and flag projects that could be weaponized. Because many researchers in autonomous robotics belong to such institutions, many conference speakers would fall under the boards' purview.

The public also needs to understand the rise of AI weaponry and what it means for our future security.
